If you host your website on a Linux server and have SSH access to it, you can create an embedded Twitch video iframe that automatically shows your Twitch channel, or whoever you’re hosting.

This is a simple way to support people on Twitch. Plus, if your website gets more views than your Twitch channel, it could make a big difference.

I believe all browsers mute embedded video automatically. To count as a view, the video has to be unmuted manually by the person visiting your website.

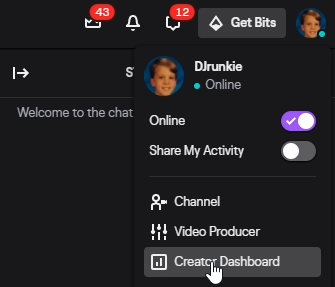

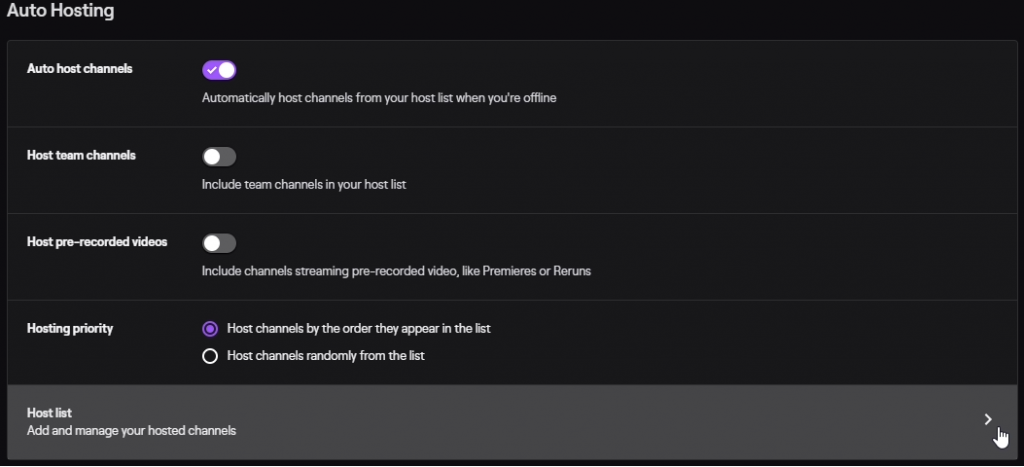

Set up auto hosting on your Twitch account.

Log in to Twitch, click on your user icon in the upper right, then click on Creator Dashboard.

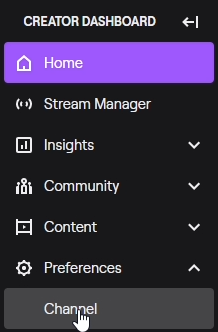

On the dashboard, click on Preferences, then Channel.

Scroll down to Auto Hosting.

Enable Auto host channels, then click on Host list. Add whoever you want to your host list.

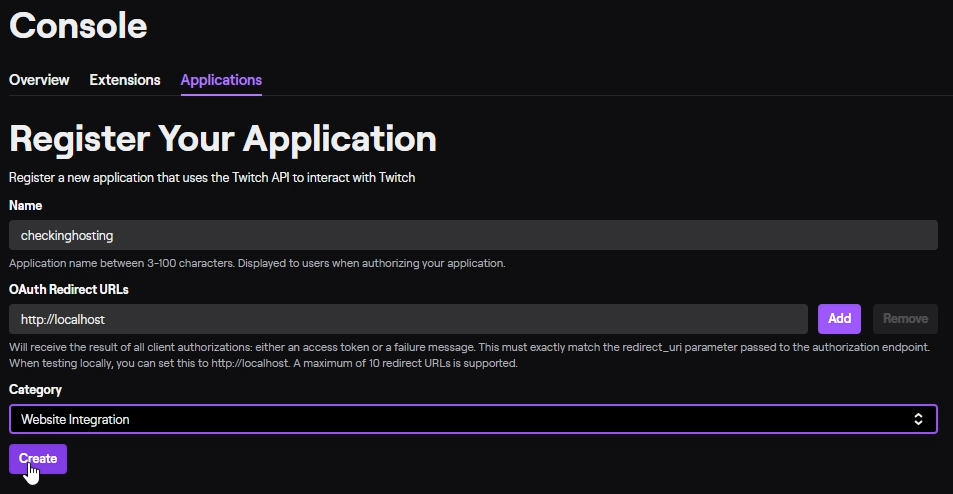

Create an app on dev.twitch.tv

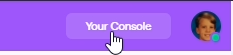

Go to https://dev.twitch.tv/login, log in with your Twitch credentials, then click on Your Console.

Click on Applications.

Click on Register your Application.

Enter a unique name for your application.

Enter http://localhost for the OAuth Redirect URL.

Choose any category; I’m using “Website Integration”.

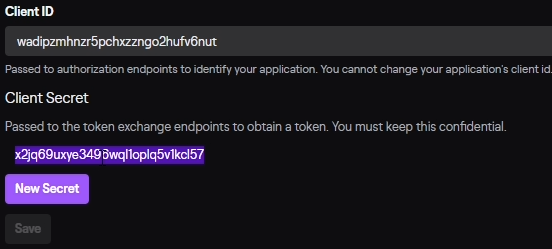

Click on the “Manage” button on your new application.

Copy your Client ID into a notepad.

Click New Secret and copy the secret as well.

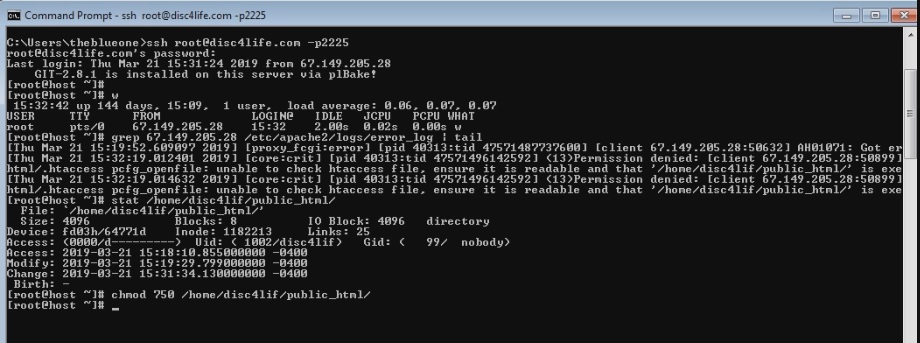

Open Command Prompt in Windows, or a terminal in Linux, and SSH into your server. On Windows, the OpenSSH client must be enabled for the ssh command to work in Command Prompt.

I’ve made the following code available here for easier copying and pasting: https://disc4life.com/embeddedtwitchiframe.txt

Modify this curl command with the client_id and client_secret from the step above, then run it.

curl -X POST 'https://id.twitch.tv/oauth2/token?client_id=wadipzmhnzr5pchxzzngo2hufv6nut&client_secret=x2jq69uxye3496wql1oplq5v1kcl57&grant_type=client_credentials'
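The token request returns a small JSON object with access_token, expires_in and token_type. If you’d rather not count quote-delimited fields by hand, here’s a minimal sketch that pulls a value out by key name instead. The function name json_string is my own (hypothetical), it only handles simple "key":"value" string pairs with no escaped quotes, and it uses plain sed so jq doesn’t need to be installed.

```shell
# Hypothetical helper (not part of the original scripts): print the value of
# a "key":"value" pair from a flat JSON response. Only handles string values
# without escaped quotes; uses sed so jq isn't required.
json_string() {
    # $1 = JSON text, $2 = key name
    printf '%s\n' "$1" | sed -n "s/.*\"$2\":\"\([^\"]*\)\".*/\1/p"
}

# Example usage (put your own client_id and client_secret in the URL):
# response=$(curl -s -X POST 'https://id.twitch.tv/oauth2/token?client_id=YOUR_CLIENT_ID&client_secret=YOUR_SECRET&grant_type=client_credentials')
# token=$(json_string "$response" access_token)
```

If the key isn’t in the response (for example, the request failed), the function prints nothing, which is easy to test for with [ -z ].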

Note: I recommend logging in via SSH as the website’s user. I was lazy and used root. If you’re not familiar with file permissions, use the website user; in this case, that would have been disc4lif.

The access token in the response is your OAuth token. It expires in about 2 months.
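To see how much room that leaves, here’s a small sketch that turns the expires_in field (in seconds) into whole days. The helper name is hypothetical, and 5011271 in the example is only an illustrative value, not necessarily what Twitch will return for you. Anything over about 31 days means a monthly refresh stays ahead of expiry.

```shell
# Hypothetical helper: read expires_in (seconds) from the token response
# and print it as whole days, rounded down. Returns 1 if the field is missing.
expires_in_days() {
    # $1 = JSON text from the token request
    secs=$(printf '%s\n' "$1" | sed -n 's/.*"expires_in":\([0-9][0-9]*\).*/\1/p')
    [ -n "$secs" ] || return 1
    echo $((secs / 86400))
}

# Example with an illustrative value: 5011271 seconds is 58 whole days.
# expires_in_days '{"access_token":"abc123","expires_in":5011271,"token_type":"bearer"}'
```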

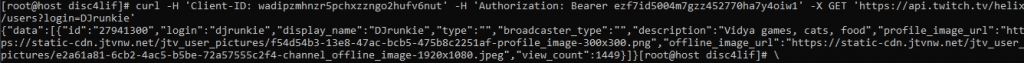

Now we use this information to get your channel ID from the Twitch API.

Modify this curl command with your client ID and the access token you just received.

Change login=DJrunkie to your Twitch name. If you leave it unchanged, you’ll just get the information for my channel from the Twitch API.

curl -H 'Client-ID: wadipzmhnzr5pchxzzngo2hufv6nut' -H 'Authorization: Bearer ezf7id5004m7gzz452770ha7y4oiw1' -X GET 'https://api.twitch.tv/helix/users?login=DJrunkie'
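The channel ID is the "id" value in that response. Here’s a minimal sketch for grabbing it with sed, assuming the response contains exactly one user. The function name channel_id is hypothetical.

```shell
# Hypothetical helper: print the user "id" from a Helix /users response.
# Assumes the response contains exactly one user.
channel_id() {
    # $1 = JSON text from the users request
    printf '%s\n' "$1" | sed -n 's/.*"id":"\([^"]*\)".*/\1/p'
}

# Example usage, with your own client ID and access token:
# channel_id "$(curl -s -H 'Client-ID: YOUR_CLIENT_ID' -H 'Authorization: Bearer YOUR_TOKEN' 'https://api.twitch.tv/helix/users?login=DJrunkie')"
```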

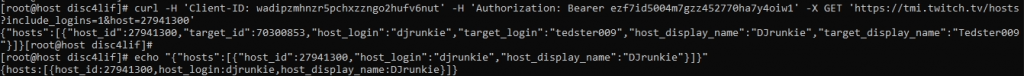

Now use the channel ID to check whether we’re streaming or currently hosting someone. Again, replace the client ID and access token, then replace host=27941300 with your channel ID.

curl -H 'Client-ID: wadipzmhnzr5pchxzzngo2hufv6nut' -H 'Authorization: Bearer ezf7id5004m7gzz452770ha7y4oiw1' -X GET 'https://tmi.twitch.tv/hosts?include_logins=1&host=27941300'

The top line of output is what you get when we’re hosting someone; the bottom is what you get when we’re streaming.
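The twitchcheck.sh script further down picks these values out by counting quote-delimited fields (cut -f14 and cut -f6). As I read those positions, field 14 is the target_login of the channel being hosted, and field 6 is host_login when there’s no hosting target. Here’s a sketch that matches target_login by name instead, so it doesn’t depend on field order. The function name is hypothetical, and the JSON shapes in the comments are reconstructed from those field positions, not captured Twitch responses.

```shell
# Hypothetical helper: print target_login from a tmi.twitch.tv/hosts
# response, or nothing if we aren't hosting anyone. Assumed shapes, inferred
# from the fields twitchcheck.sh cuts out (target_id here is made up):
# hosting:     {"hosts":[{"host_id":27941300,"target_id":12345,"host_login":"djrunkie","target_login":"tedster009",...}]}
# not hosting: {"hosts":[{"host_id":27941300,"host_login":"djrunkie","host_display_name":"DJrunkie"}]}
hosting_target() {
    # $1 = JSON text from the hosts request
    printf '%s\n' "$1" | sed -n 's/.*"target_login":"\([^"]*\)".*/\1/p'
}
```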

Note: Twitch will not embed video on an http site. You must have an SSL certificate installed and serve your website over https://.

With this info, we can now create a script to update an iframe on our website.

This will show an iframe on https://disc4life.com/hostingexample/index.php

vim public_html/hostingexample/index.php

<p align="left">Give my friends on twitch a view!</p>

<iframe src='https://player.twitch.tv/?channel=tedster009&enableExtensions=true&muted=false&parent=disc4life.com&parent=www.disc4life.com&player=popout&volume=.00001' frameborder="0" allowfullscreen="true" scrolling="no" height="600" width="1066"></iframe>

This will show an iframe on https://disc4life.com/hostingexample/news.php

vim public_html/hostingexample/news.php

<h3 align="center">Give my friends on twitch a view!</h3>

<p align="center">

<iframe src='https://player.twitch.tv/?channel=tedster009&enableExtensions=true&muted=false&parent=disc4life.com&parent=www.disc4life.com&parent=vpn.disc4life.com&player=popout&volume=.00001' frameborder="0" allowfullscreen="true" scrolling="no" height="300" width="525"></iframe>

</p>

Note: For text editing over SSH, use nano if you prefer. Vim can be confusing if you’re not familiar with it.

Change channel=tedster009 to whichever channel you want.

Replace the parent= values (disc4life.com, www.disc4life.com, vpn.disc4life.com) with your own domain names. Twitch requires a parent entry for every domain the player is embedded on.

Change the height and width (in pixels) to whatever you prefer, ideally keeping a 16:9 ratio.
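If you embed on several domains, building the src URL from a list can save some typos. This is a hypothetical helper, not something Twitch provides. It keeps channel= as the first parameter because twitchcheck.sh reads the channel as the third =-delimited field of the iframe line (cut -f3 -d=), so anything else, like volume or player, should go after it.

```shell
# Hypothetical helper: build a Twitch player URL with one parent= entry per
# domain. channel= stays first so twitchcheck.sh's cut -f3 -d= still finds it.
player_url() {
    # $1 = channel, remaining arguments = domains the embed appears on
    channel=$1
    shift
    url="https://player.twitch.tv/?channel=$channel"
    for domain in "$@"; do
        url="$url&parent=$domain"
    done
    printf '%s\n' "$url&muted=false"
}

# Example:
# player_url tedster009 disc4life.com www.disc4life.com vpn.disc4life.com
```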

This script will update the iframes in index.php and news.php with whoever you’re currently hosting, or with your own channel if you’re streaming. It reads the current channel from index.php only, so both files should start out with the same channel.

Replace every /home/disc4lif/public_html/hostingexample/ path here with the full path to the website files the script will be updating.

Update the curl commands in nhosting= and istream= with your last curl command.

Update cname=djrunkie with your channel name.
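Here’s the decision the script makes, pulled out into a small function so it can be tried without calling Twitch. It’s a sketch with a hypothetical name: give it the channel currently in the iframe, the hosted channel (empty if you aren’t hosting anyone), and your own channel name, and it prints the channel to switch to, or nothing if the iframe is already right. One difference from the script: twitchcheck.sh also does nothing when the API call fails and everything comes back blank, so a caller of this function would have to check for that separately.

```shell
# Hypothetical sketch of twitchcheck.sh's if/elif/else as a pure function.
# Prints the channel the iframe should switch to, or nothing if no change.
next_channel() {
    # $1 = channel currently in the iframe (chosting)
    # $2 = hosted channel from the API, empty if not hosting anyone
    # $3 = our own channel name (cname)
    if [ -z "$2" ]; then
        want=$3
    else
        want=$2
    fi
    if [ "$want" != "$1" ]; then
        printf '%s\n' "$want"
    fi
}

# Example: not hosting anyone, iframe still shows tedster009
# next_channel tedster009 '' djrunkie    # prints djrunkie
```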

vim /home/disc4lif/twitchcheck.sh

#!/bin/bash

#check for the iframe line in the file we're updating, and cut out the channel name we want to change

chosting=$(grep iframe /home/disc4lif/public_html/hostingexample/index.php | cut -f3 -d= | cut -f1 -d\&)

#check the twitch api to see if this information has changed

nhosting=$(curl -s -H 'Client-ID: wadipzmhnzr5pchxzzngo2hufv6nut' -H 'Authorization: Bearer ezf7id5004m7gzz452770ha7y4oiw1' -X GET 'https://tmi.twitch.tv/hosts?include_logins=1&host=27941300' | cut -f14 -d\")

#we also need to check the twitch api to see if we are streaming.

istream=$(curl -s -H 'Client-ID: wadipzmhnzr5pchxzzngo2hufv6nut' -H 'Authorization: Bearer ezf7id5004m7gzz452770ha7y4oiw1' -X GET 'https://tmi.twitch.tv/hosts?include_logins=1&host=27941300' | cut -f6 -d\")

#our channel name

cname=djrunkie

#If we're currently streaming (not hosting anyone) and chosting doesn't equal our twitch name, update chosting to our twitch name in our files. Otherwise, if chosting already equals nhosting, or nhosting is blank, exit, because either it's already set or we're not hosting anyone. Otherwise chosting needs to be updated with nhosting.

if [[ "$istream" = host_login ]] && [[ "$chosting" != "$cname" ]]

then

sed -i "s/channel=$chosting/channel=$cname/g" /home/disc4lif/public_html/hostingexample/index.php

sed -i "s/channel=$chosting/channel=$cname/g" /home/disc4lif/public_html/hostingexample/news.php

elif [[ "$chosting" = "$nhosting" ]] || [[ "$nhosting" = '' ]]

then

echo "They're the same dude, or nhosting was blank"

exit

else

sed -i "s/channel=$chosting/channel=$nhosting/g" /home/disc4lif/public_html/hostingexample/index.php

sed -i "s/channel=$chosting/channel=$nhosting/g" /home/disc4lif/public_html/hostingexample/news.php

fi

Create a script that grabs a new OAuth ID token and updates the token in the twitchcheck.sh file.

Modify both directory paths here with the full path to the twitchcheck.sh script.

Update ntoken= with your first curl command
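Because newtoken.sh rewrites twitchcheck.sh in place, a failed token request could blank out the token. Here’s an offline sketch of the same swap with a guard. swap_token is a hypothetical name, it takes the token response body as an argument instead of calling curl itself, and it assumes GNU grep and sed (grep -o, sed -i) as found on most Linux servers.

```shell
# Hypothetical, offline-testable version of the token swap in newtoken.sh:
# replace the token after "Bearer " in a script file with the access_token
# from a token response. Leaves the file untouched if either token is missing.
swap_token() {
    # $1 = script file to update, $2 = token response JSON
    new=$(printf '%s\n' "$2" | sed -n 's/.*"access_token":"\([^"]*\)".*/\1/p')
    old=$(grep -o 'Bearer [A-Za-z0-9]*' "$1" | head -n 1 | cut -d' ' -f2)
    if [ -z "$new" ] || [ -z "$old" ]; then
        return 1
    fi
    sed -i "s/$old/$new/g" "$1"
}

# Example:
# swap_token /home/disc4lif/twitchcheck.sh "$(curl -s -X POST 'https://id.twitch.tv/oauth2/token?client_id=YOUR_CLIENT_ID&client_secret=YOUR_SECRET&grant_type=client_credentials')"
```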

vim /home/disc4lif/newtoken.sh

#!/bin/bash

#get the current token from the twitchcheck script we just created

ctoken=$(grep -m 1 'Bearer' /home/disc4lif/twitchcheck.sh | cut -f4 -d\' | awk '{print $3}')

#get a new token using our client_id and client_secret from our twitch application

ntoken=$(curl -s -X POST 'https://id.twitch.tv/oauth2/token?client_id=wadipzmhnzr5pchxzzngo2hufv6nut&client_secret=x2jq69uxye3496wql1oplq5v1kcl57&grant_type=client_credentials' | grep -o '"access_token":"[^"]*"' | cut -f4 -d\")

#stop if either token is empty, so a failed request can't wipe the token out of twitchcheck.sh

if [ -z "$ctoken" ] || [ -z "$ntoken" ]; then exit 1; fi

#replace the current token with the new token

sed -i "s/$ctoken/$ntoken/g" /home/disc4lif/twitchcheck.sh

Now make a crontab that runs these scripts. I run the first one every five minutes and the second one once a month.

Replace /home/disc4lif/ with the path to your scripts.

crontab -e

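*/5 * * * * bash /home/disc4lif/twitchcheck.sh

0 2 7 * * bash /home/disc4lif/newtoken.sh

The second entry runs once, at 2:00 AM on the 7th of every month. Both entries use bash rather than sh because twitchcheck.sh uses bash-only [[ ]] tests, and running a script as "sh script" ignores its #!/bin/bash line.

Now you have an embedded Twitch iframe video on your website that updates automatically depending on whether you’re streaming or hosting someone.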

Check out the video if you’re confused about any part of the process!